L-CAS is looking for another Research Assistant to work in the STRANDS project. Consider applying if you are ambitious, excited about mobile robots and long-term behaviour, and looking for a great opportunity to engage in collaborative research and also pursue a PhD. We need a great communicator, robot programmer, and system integrator with ambition and dedication to excellent research. Read the details and apply online for this post on “Intelligent Long-term Behaviour in Mobile Robotics”.

Author: Marc Hanheide

Presentations of our new FP7 project “STRANDS” at ERF and ICRA

Tom Duckett and Marc Hanheide of the Lincoln Centre for Autonomous Systems will be presenting the general aims and objectives of the new FP7 project “STRANDS: Spatio-Temporal Representations and Activities For Cognitive Control in Long-Term Scenarios” – and L-CAS’ role in its research work plan in particular – at ICRA 2013 and ERF 13, respectively. The project’s aim is …

“… to enable a robot to achieve robust and intelligent behaviour in human environments through adaptation to, and the exploitation of, long-term experience.”

Our approach is based on understanding 3D space and how it changes over time, from milliseconds to months. Within L-CAS, two post-doctoral research fellows and one PhD student will work towards this aim over the coming four years, starting in April.

More job openings at L-CAS

There are a total of five vacancies at L-CAS at the moment. Opportunities include both PhD and PostDoc positions in robotics and computer vision. Apply now!

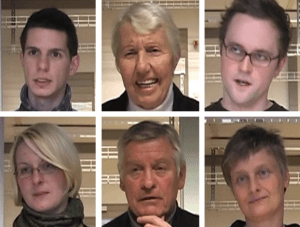

Paper on Facial Analysis for HRI accepted for ICRA 2013

Our joint paper with the CoR-Lab in Bielefeld has been accepted at ICRA 2013. Kudos go to Christian Lang for his excellent work.

Facial Communicative Signal Interpretation in Human-Robot Interaction by Discriminative Video Subsequence Selection

Facial communicative signals (FCSs) such as head gestures, eye gaze, and facial expressions can provide useful feedback in conversations between people and also in human-robot interaction. This paper presents a pattern recognition approach for the interpretation of FCSs in terms of valence, based on the selection of discriminative subsequences in video data. These subsequences capture important temporal dynamics and are used as prototypical reference subsequences in a classification procedure based on dynamic time warping and feature extraction with active appearance models. Using this valence classification, the robot can discriminate positive from negative interaction situations and react accordingly. The approach is evaluated on a database containing videos of people interacting with a robot by teaching the names of several objects to it. The verbal answer of the robot is expected to elicit the display of spontaneous FCSs by the human tutor, which were classified in this work. The achieved classification accuracies are comparable to the average human recognition performance and outperformed our previous results on this task.

New PostDoc and PhD positions available in L-CAS

L-CAS is hiring: We are seeking to recruit two Postdoctoral Fellows and one PhD student in the context of a European collaborative project, involving six academic institutes and two industrial partners across four European countries. The project aims to enable robots to achieve robust and intelligent behaviour in human environments through adaptation to, and the exploitation of, long-term experience.

Join the L-CAS team!